AI now negotiates seven-figure ransomware demands

PLUS: The Pentagon's $200M AI deals and why AI can't be trusted

Evening, Cyber Security enthusiast!

A new ransomware group is deploying AI to handle victim negotiations, automating the process of demanding seven-figure ransoms. This development marks a chilling new application of AI in the cybercrime ecosystem.

The system automates communication to increase psychological pressure, allowing for more efficient and scalable attacks. How will security professionals adapt their incident response playbooks when the extortionist on the other side is a tireless, automated machine?

In today’s Cyber Security recap:

AI now negotiates seven-figure ransomware demands

The Pentagon's $200M AI defense deals

Why AI models can't be trusted

AsyncRAT forks lead to dangerous new malware

Your new negotiator is an AI

The Recap: A new ransomware operation named GLOBAL GROUP is using an AI-powered panel to automate victim negotiations and escalate seven-figure ransom demands.

Unpacked:

The group's AI system automates communication to increase psychological pressure during negotiations, enabling even non-English-speaking affiliates to manage high-stakes extortion.

GLOBAL GROUP advertises an 85% revenue share for affiliates, gives them a mobile-friendly panel to manage attacks, and supports ransomware builds across Windows, Linux, and macOS.

The group accelerates its attacks by partnering with Initial Access Brokers to buy pre-compromised network access, which lets affiliates skip initial infiltration and move straight to deploying ransomware.

Bottom line: This AI-driven approach provides a blueprint for cybercriminals to launch more efficient and scalable attacks with less manual effort. Security professionals must now adapt defenses to counter automated threats that can operate 24/7 without human fatigue.

The Pentagon's AI Power Play

The Recap: The U.S. Department of Defense is awarding contracts of up to $200 million each to Google, OpenAI, Anthropic, and Elon Musk's xAI. The move aims to bolster national security by accelerating the integration of advanced AI into military applications.

Unpacked:

Each award carries a ceiling of $200 million, so the four contracts together could be worth as much as $800 million if every one is fully exercised.

Elon Musk's xAI, despite recent controversy over its Grok chatbot, is a notable partner and has announced its "Grok for Government" suite to serve federal and national security clients.

This builds on an existing trend, as OpenAI already works with the DOD, and other tech giants like Meta are developing military tech to maintain a strategic advantage.

Bottom line: These partnerships solidify the deep integration of commercial AI into the U.S. defense apparatus. The race for AI superiority is now a central pillar of modern national security strategy.

AI's Crisis of Trust

The Recap: Top U.S. and international security agencies are sounding the alarm on a new vector of attack: AI data poisoning. A joint bulletin from the NSA, CISA, and FBI warns that adversaries are actively corrupting the data that trains AI models, creating a fundamental crisis of trust in automated systems.

Unpacked:

Unlike traditional cyberattacks that cause systems to fail, data poisoning subtly distorts an AI's perception of reality, leading it to make dangerously flawed decisions without triggering obvious alerts.

This threat is already in the wild, with foundation models found to be parroting Kremlin-aligned propaganda after ingesting content seeded by a Russian disinformation network.

The models are highly sensitive to tainted data: researchers have shown that poisoning just 0.001% of a training set, or 1 part in 100,000, can trigger significant medical misinformation in healthcare LLMs.

Bottom line: The cybersecurity perimeter has moved from defending networks to defending knowledge itself. This shift makes validating data provenance and continuously monitoring AI model behavior a critical responsibility for every organization deploying these tools.
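For readers wondering what "validating data provenance" can look like in practice, here is a minimal sketch in Python. It is not taken from the agencies' bulletin. It assumes you can snapshot a trusted copy of your training records and later compare the live data against that snapshot by SHA-256 hash. The names `fingerprint`, `build_manifest`, and `find_changes` are hypothetical, chosen for illustration.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return the hex SHA-256 digest of one training record."""
    return hashlib.sha256(content).hexdigest()


def build_manifest(records: dict[str, bytes]) -> dict[str, str]:
    """Snapshot a trusted dataset as a mapping of record name to hash."""
    return {name: fingerprint(content) for name, content in records.items()}


def find_changes(manifest: dict[str, str], records: dict[str, bytes]) -> dict[str, list[str]]:
    """Compare current records against the trusted manifest.

    Assumption: the manifest was captured while the data was still clean.
    """
    modified = sorted(
        name for name, content in records.items()
        if name in manifest and fingerprint(content) != manifest[name]
    )
    added = sorted(name for name in records if name not in manifest)
    missing = sorted(name for name in manifest if name not in records)
    return {"modified": modified, "added": added, "missing": missing}
```

A check like this only flags records that changed or appeared after the snapshot. It cannot tell you whether the data was already poisoned when the manifest was built, which is why the monitoring half of the advice still matters.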

Malware gets weird

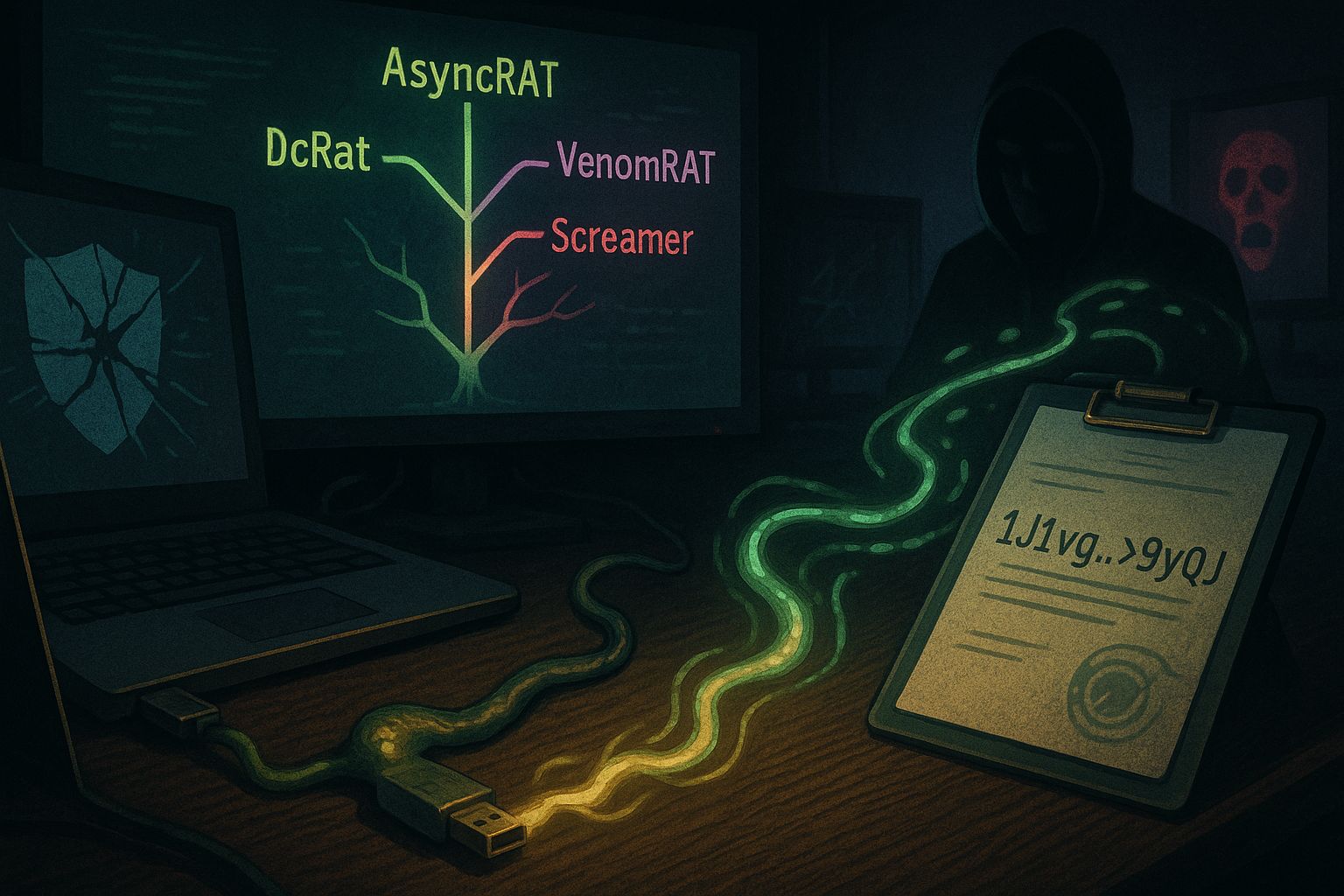

The Recap: The open-source code for the AsyncRAT remote access trojan has led to an explosion of creative and dangerous new malware variants. Attackers are forking the original code to build custom tools that are both bizarre and effective.

Unpacked:

Leading variants like DcRat and VenomRAT use advanced techniques to evade detection and actively terminate security tools on infected systems.

Some forks include unusual plugins, such as a "Screamer" that delivers jump scares and another that spreads malware through connected USB drives.

A new plugin specifically targets crypto users by monitoring the clipboard to hijack wallet addresses and reroute funds during transactions.

Bottom line: Making potent malware code open source significantly lowers the barrier to entry, enabling less-skilled actors to launch damaging attacks. To counter these rapidly evolving tools, security teams need to shift focus from detecting known threats to analyzing suspicious behavior.
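The clipboard-hijacking plugin described above lends itself to a simple behavioral check: if the value a user copied and the value that gets pasted both look like wallet addresses but differ, something may have swapped them. The Python sketch below illustrates that idea only. The regular expressions are simplified heuristics with no checksum validation, and the function names are hypothetical rather than part of any real security product.

```python
import re
from typing import Optional

# Simplified shape checks only; real validation would also verify checksums.
ADDRESS_PATTERNS = {
    "ethereum": re.compile(r"0x[0-9a-fA-F]{40}"),
    "bitcoin_legacy": re.compile(r"[13][a-km-zA-HJ-NP-Z1-9]{25,34}"),
    "bitcoin_bech32": re.compile(r"bc1[ac-hj-np-z02-9]{11,71}"),
}


def address_kind(text: str) -> Optional[str]:
    """Return the kind of wallet address the text resembles, or None."""
    candidate = text.strip()
    for kind, pattern in ADDRESS_PATTERNS.items():
        if pattern.fullmatch(candidate):
            return kind
    return None


def normalise(text: str) -> str:
    """Strip whitespace; lowercase Ethereum addresses, which are case-insensitive."""
    candidate = text.strip()
    if address_kind(candidate) == "ethereum":
        return candidate.lower()
    return candidate


def looks_like_clipboard_swap(copied: str, pasted: str) -> bool:
    """Flag the case where one wallet address was silently replaced by another."""
    if address_kind(copied) is None or address_kind(pasted) is None:
        return False
    return normalise(copied) != normalise(pasted)
```

In practice this comparison would sit inside whatever component can see both values, such as a wallet app confirming the destination before a transfer is sent.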

The Shortlist

Kafbat UI suffers from a critical RCE vulnerability (CVE-2025-49127) allowing unauthenticated attackers to execute code via unsafe deserialization in JMX services.

Phishers are weaponizing SVG image files with embedded, obfuscated JavaScript to create evasive, zero-click redirects that bypass traditional email filters.

North Korean actors expanded their "Contagious Interview" supply chain campaign, using a new malware loader called XORIndex to steal credentials and cryptocurrency wallet data via malicious npm packages.

GitGuardian launched its Model Context Protocol (MCP) Server, new infrastructure designed to let AI agents detect and remediate hardcoded secrets directly within developer IDEs.
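On the SVG phishing item above: because SVG is XML, a mail or web gateway can inspect an attachment for active content before it reaches a user. The following Python sketch shows one way that might look, assuming the file parses as XML. The function name `svg_findings` and the list of checks are illustrative assumptions, not a complete filter, and heavily obfuscated payloads may need deeper analysis. Note that the standard-library parser is not hardened against malicious XML, and the Python documentation points to the third-party `defusedxml` package for untrusted input.

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop any '{namespace}' prefix and lowercase the tag or attribute name."""
    return tag.rsplit("}", 1)[-1].lower()


def svg_findings(svg_text: str) -> list[str]:
    """Return a list of script-capable features found in an SVG document."""
    try:
        root = ET.fromstring(svg_text)
    except ET.ParseError:
        # Treat anything that does not parse as suspicious rather than safe.
        return ["unparseable XML"]

    findings = []
    for element in root.iter():
        name = local_name(element.tag)
        if name in ("script", "foreignobject"):
            findings.append(f"<{name}> element")
        for attr, value in element.attrib.items():
            attr_name = local_name(attr)
            if attr_name.startswith("on"):
                # Covers handlers such as onload and onclick.
                findings.append(f"event handler attribute {attr_name}")
            elif attr_name == "href" and value.strip().lower().startswith("javascript:"):
                findings.append("javascript: URL in href")
    return findings
```

An empty list means none of these specific features were seen, not that the file is safe.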

Feedback?

That’s a wrap for today!

Before you head out, let me know your thoughts! I’d love to hear any feedback on where this newsletter could be improved.

Thanks for reading,

David H.